<ramble>

Partiality is inescapable:

-

Roads, bridges and cranes have maximum load/weight limits - exceeding them results in damage and possibly collapse.

-

Water and gas pipes (along with submersibles) have maximum pressure limits, with similar consequences when exceeded.

-

Insulated electrical cables and electronic components have maximum voltage limits.

-

Even superconductors have maximum limits on electrical current.

So this obsesession fascination with totality for software completely eludes me - even if you could stomp every last bug out of existence from your programs using types, the Universe in which said programs reside contains cosmic radiation which can not only change nucleotides, but bits of information as well (be it data or code). What was bug-free yesterday could still crash tomorrow.

“But it makes the maths easier!” So would pretending zero doesn’t exist. But it does:

- Place one bead in a bowl.

- Take one bead from same bowl.

- How many beads are left in that bowl?

Zero (and perhaps an empty round container was the inspiration for its numerical/symbolic representation, 0).

There’s also the bemusing attempts to avoid the problems associated with the actual existence of zero:

Static types are alright when you’re not having to constantly type them out - it’s so much nicer to just write:

midpoint x y = (x + y) / 2

instead of:

double midpoint (double x, double y) { return (x + y) / 2; }

But now the goal is to explicate every last detail of what the definitions in our programs should do using types, to the point of implementing fully-formed proofs! So what prevents bugs from sneaking into said types and proofs (particularly as Haskell’s type system has been Turing-complete since the advent of functional dependencies)? It’s just extra code; the bugs aren’t fussy!

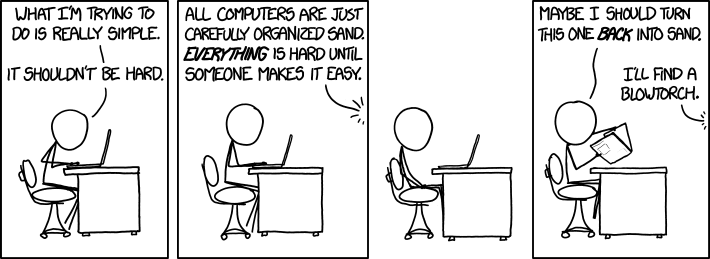

If too many perceive Haskell as “being hard” (e.g. poor benefit/cost ratio), then the blowtorch is optional: there are many other programming languages out there:

-

old ones:

-

and new:

</ramble>